Theta Network and AWS Join Forces to Launch Custom Amazon AI Chips Trainium & Inferentia on EdgeCloud

Theta and AWS have collaborated on multiple technical fronts for several years, and over the last six months the two teams have worked closely to integrate their platforms. Recognizing Theta EdgeCloud Hybrid as the de facto leader in decentralized cloud infrastructure, trusted by dozens of global academic institutions, professional sports franchises, and top-tier esports organizations, AWS has taken a major step forward.

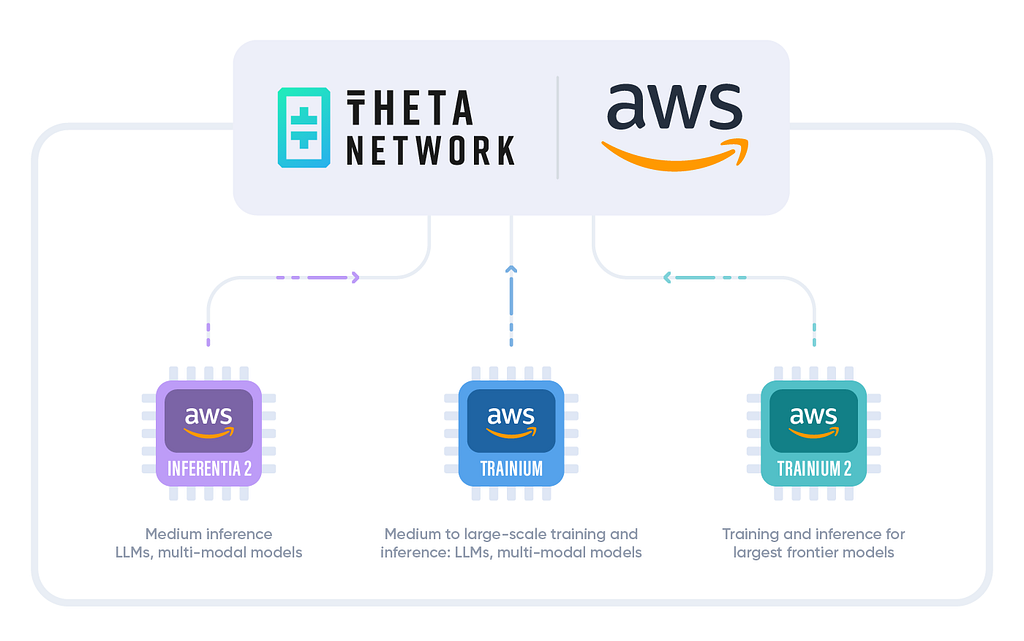

For the first time, AWS has approved a decentralized platform to integrate its cutting-edge AI silicon: AWS Trainium and Inferentia EC2 instances. This milestone makes Theta the first blockchain network to deploy Amazon’s next-generation chipsets, delivering unmatched performance for AI, video, and media workloads. Combined with over 30,000 NVIDIA GPUs contributed by the Theta community, EdgeCloud Hybrid now offers a globally distributed compute layer with unprecedented performance-to-cost efficiency, marking a breakthrough moment in the evolution of cloud services. The platform is secured by the THETA blockchain and powered by TFUEL payments, and global customers can now access the best of AWS compute and Theta’s decentralized GPU marketplace through a single integrated EdgeCloud user interface.

For years, the AI and media landscape has relied on a single hardware provider: NVIDIA. While NVIDIA GPUs have driven impressive progress, the growing demand for AI and video compute has made access more competitive and costly. To leapfrog cloud-based alternatives, Theta EdgeCloud Hybrid is expanding its availability and embracing a diversified compute infrastructure by bringing AWS custom silicon on board.

In many AI workloads, AWS Trainium and Inferentia not only rival but exceed the performance of alternative platforms at a fraction of the operational cost:

- On GPT-4-class models for large text generation, Trainium delivers throughput similar to an NVIDIA A100 cluster at ~54% lower cost per token.

- For mid-size Llama 2/3 LLMs, Trainium and Inferentia deliver up to 3x higher inference throughput than GPU baselines.

- On Stable Diffusion XL image generation, Stability AI recently partnered with AWS to use Amazon SageMaker with Trainium for model training, achieving 58% lower training time and cost compared to their previous infrastructure.

- For Whisper 2 speech-to-text and translation, Trainium can achieve low-latency transcription when the model is compiled to run in a streaming fashion on NeuronCores. With 96 GB of memory per Trainium2 chip, large Whisper models fit in accelerator memory without overflow, ensuring stable performance.
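To make the cost and memory figures in the list above concrete, here is a minimal back-of-the-envelope sketch. The 54% reduction and the 96 GB per-chip memory come from the list; the baseline price of $10 per million tokens is a made-up placeholder, the parameter count of roughly 1.55 billion is the commonly cited size of the largest Whisper model, and the function names are purely illustrative. It counts model weights only (no activations or buffers), so treat it as a rough estimate rather than a sizing tool.

```python
# Back-of-the-envelope arithmetic for the figures quoted in the list above.
# Only the 54% reduction and the 96 GB memory figure come from the article;
# every other number here is an assumption for illustration.

TRAINIUM_COST_REDUCTION = 0.54  # "~54% lower cost per token" (from the article)
CHIP_MEMORY_GB = 96             # per-chip memory quoted in the article


def discounted_cost(baseline_cost: float, reduction: float) -> float:
    """Return the cost after a fractional reduction (0.54 means 54% cheaper)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline_cost * (1.0 - reduction)


def weights_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights (for example bf16 or fp16).
    """
    return num_params * bytes_per_param / 1e9


def fits_on_chip(num_params: float, bytes_per_param: int = 2,
                 chip_memory_gb: float = CHIP_MEMORY_GB) -> bool:
    """Check whether the weights alone fit in one chip's memory."""
    return weights_footprint_gb(num_params, bytes_per_param) <= chip_memory_gb


if __name__ == "__main__":
    baseline = 10.0          # hypothetical: $10 per million tokens on a GPU cluster
    whisper_params = 1.55e9  # assumed size of a large Whisper model

    print(f"Cost per million tokens: ${discounted_cost(baseline, TRAINIUM_COST_REDUCTION):.2f}")
    print(f"Weights footprint: {weights_footprint_gb(whisper_params):.1f} GB")
    print(f"Fits in {CHIP_MEMORY_GB} GB: {fits_on_chip(whisper_params)}")
```

Under these assumptions the weights of a large Whisper model take up only a few gigabytes, which leaves most of the chip's memory free for activations, audio buffers, and batching.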

Theta EdgeCloud Hybrid: The First Decentralized Platform to Integrate AWS Trainium and Inferentia

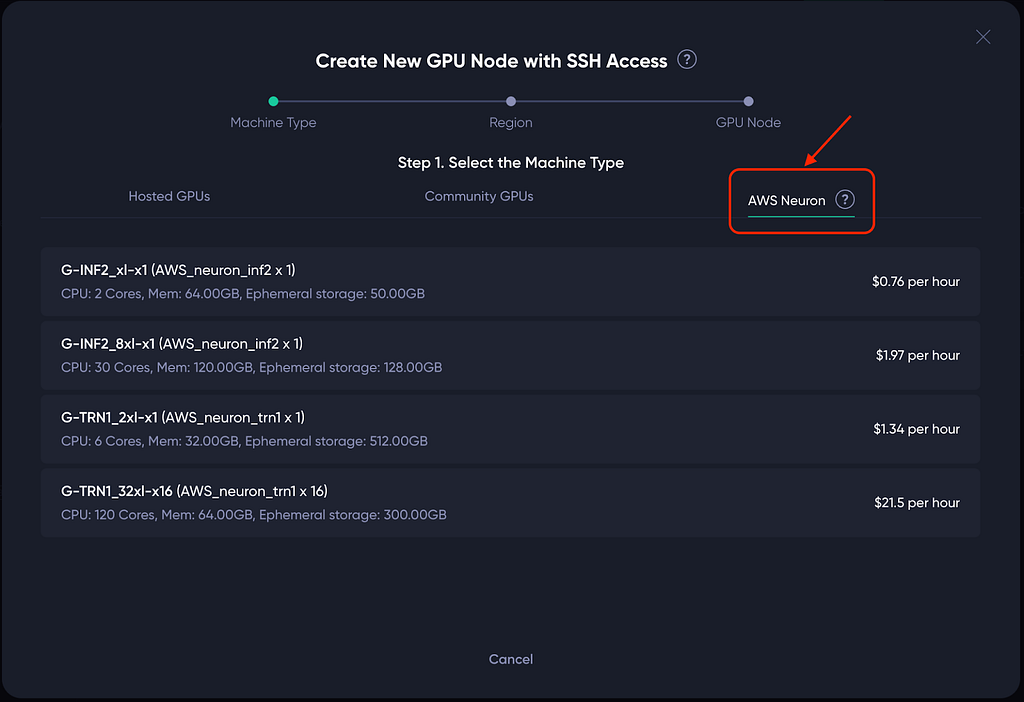

EdgeCloud is now the first third-party decentralized platform to adopt AWS’s purpose-built AI instances, powered by AWS Trainium and Inferentia. These instances offer high performance at the lowest cost for training and inference at scale.

Trainium 1st Gen (Trn1) Instances: Powered by AWS Trainium chips, Trn1 instances are purpose-built for high-performance deep learning training and offer up to 50% cost-to-train savings over comparable Amazon EC2 instances.

Trainium 2nd Gen (Trn2) Instances: Powered by AWS Trainium2 chips, Trn2 instances are purpose-built for high-performance generative AI training and inference of models with hundreds of billions to trillions of parameters.

Inferentia 2nd Gen (Inf2) Instances: Powered by second-generation AWS Inferentia chips, Inf2 instances are purpose-built for deep learning inference. They deliver high performance at the lowest cost in Amazon EC2 for generative AI models, including large language models and vision transformers. Compared to Inf1 instances, Inf2 offers 3x higher compute performance, 4x higher accelerator memory, up to 4x higher throughput, and up to 10x lower latency.

With AWS AI Chips Onboard, Theta EdgeCloud Ushers in New Era of Decentralized Cloud Infrastructure

With this new addition, enterprises, researchers, and developers now have greater flexibility to select the best resource for their specific workloads. Theta EdgeCloud Hybrid now provides seamless access to a wide range of compute resources, including AWS AI instances, NVIDIA’s top-tier GPUs, and globally distributed edge nodes run by the Theta community. This broad range of options sets EdgeCloud Hybrid apart as the only platform combining centralized performance with decentralized scale.

High-Performance Cloud GPUs:

EdgeCloud offers access to top-tier cloud resources, including NVIDIA H100s and A100s, and now AWS Trainium and Inferentia, cutting-edge chips optimized for high-throughput AI model training and inference. This delivers the performance required for today’s most demanding generative AI workloads: training and inference of models with hundreds of billions of parameters.

Decentralized Global Community Edge GPUs:

EdgeCloud Hybrid is supported by a global network of over 30,000 community-run edge nodes, equipped with NVIDIA RTX 3000s, 4000s, and the latest 5000-series GPUs. These decentralized nodes offer highly cost-effective compute capacity for small-to-medium scale training and for inference tasks optimized at the edge closest to end users.

By combining high-performance cloud GPUs with a globally distributed network of edge nodes, Theta EdgeCloud Hybrid sets a new standard for AI infrastructure, delivering the best of both worlds in performance and accessibility.

What This Means for the Theta Community, and Why It Matters

The integration of AWS Trainium and Inferentia into the EdgeCloud Hybrid platform marks a major leap forward for the Theta community. The platform is secured by the THETA blockchain and powered by TFUEL payments, and global customers can now access the best of AWS compute and a decentralized GPU marketplace through a single integrated user interface.

Whether customers are training billion-parameter language models or deploying the fastest GenAI chatbots, these new instances make it possible to deliver the performance needed at reduced cost. And because Amazon Trn1, Trn2, and Inf2 nodes are now available directly through the EdgeCloud Hybrid interface, they can be launched just as easily as other GPU instances. All paid with TFUEL. No changes to existing workflow.

This milestone comes at a critical time. As AI models grow larger and inference becomes more demanding, the need for diverse, high-performance, and cost-effective infrastructure is urgent. Theta EdgeCloud Hybrid is uniquely designed to meet this challenge — combining high-powered cloud GPUs from AWS and NVIDIA with a globally distributed network of over 30,000 edge nodes run by you, our community.

Theta Network and AWS Join Forces to Launch Custom Amazon AI Chips Trainium & Inferentia on… was originally published in Theta Network on Medium, where people are continuing the conversation by highlighting and responding to this story.
