Bringing remote MCP Servers into the Agentverse Ecosystem, discoverable by ASI-One LLM


As AI assistants become increasingly capable, the ability to extend their functionality with real-world data and services is essential. In this article, I’ll share how I integrated Model Context Protocol (MCP) with Fetch.ai’s agent ecosystem to build an Airbnb assistant that can be discovered by ASI:One LLM through Agentverse.

Understanding the Components

🌍 Fetch.ai Ecosystem

Fetch.ai offers a powerful platform for creating, deploying, and connecting autonomous agents:

- uAgents: A lightweight framework for building agents with built-in identity, messaging, and protocol support

- Agentverse: An open marketplace where agents can be registered, discovered, and utilized

- ASI:One LLM: Fetch.ai’s Web3-native LLM designed specifically for agentic workflows, capable of discovering agents on Agentverse

- Chat Protocol: A standardized communication protocol for conversational interactions between agents

🔌 Model Context Protocol (MCP)

Model Context Protocol is an open standard created by Anthropic that provides a consistent way for AI models to interact with external tools and services:

- Standardizes how AI systems access external capabilities

- Eliminates the need for custom integrations for each service

- Enables dynamic tool discovery and calling

- Supports various transport methods (stdio, HTTP, SSE)

🤔 Why Connect MCP Servers to Agentverse?

The magic happens when you combine these technologies:

- MCP servers provide standardized access to external APIs and data sources

- Agents on Agentverse implement the Chat Protocol to handle conversations

- ASI:One LLM can discover these agents and use them to fulfill user requests

This integration enables ASI:One to access a wide range of capabilities beyond its built-in knowledge, from searching Airbnb listings to querying specialized databases or accessing productivity tools.

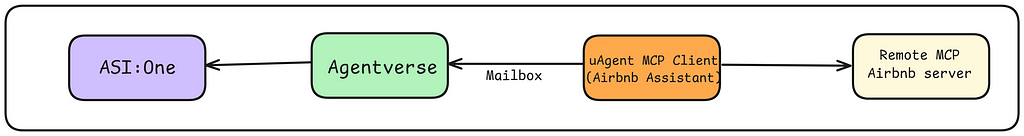

System Architecture

The architecture connects several key components:

- ASI:One LLM: Processes user requests and discovers relevant agents on Agentverse

- Agentverse: Serves as the registry and marketplace for agent discovery

- Agent with Chat Protocol: Receives requests and implements the Fetch.ai Chat Protocol

- MCP Client: Connects to MCP servers to access external services

- MCP Server: Provides standardized access to external APIs (like Airbnb)

- External Service: The actual data source or API being accessed

This modular approach allows ASI:One to dynamically discover and utilize agents that provide access to specific services through MCP.

Step-by-Step Process

Let’s break down how to bring any MCP server to Agentverse, making it discoverable by ASI:One. I’ll use my Airbnb agent as an example.

1. Understanding Your MCP Server

First, identify which MCP server you want to work with. In my case, I used the Airbnb MCP server, which provides tools for searching listings and getting listing details.

MCP servers expose their capabilities as “tools” with defined input parameters and output formats. For example, the Airbnb MCP server provides:

- airbnb_search: Search for listings with parameters like location, check-in/check-out dates, and number of guests

- airbnb_listing_details: Get details for a specific listing by ID

You can explore available MCP servers on platforms like Smithery.ai or build your own using the MCP specification.
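To make "defined input parameters" concrete, here is a minimal sketch of what a tool description can look like and how arguments might be checked against it. MCP tools carry a name, a description, and a JSON Schema `inputSchema`. The specific fields shown for `airbnb_search` (`location`, `checkin`, `checkout`, `adults`) are assumptions for illustration rather than the server's actual schema, and `missing_arguments` and `unknown_arguments` are hypothetical helpers, not part of any SDK.

```python
# Illustrative tool description. The schema fields are assumed for this
# example and are not copied from the real Airbnb MCP server.
AIRBNB_SEARCH_TOOL = {
    "name": "airbnb_search",
    "description": "Search for Airbnb listings",
    "inputSchema": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "checkin": {"type": "string"},
            "checkout": {"type": "string"},
            "adults": {"type": "integer"},
        },
        "required": ["location"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return the required parameters that are absent from `arguments`."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def unknown_arguments(tool: dict, arguments: dict) -> list:
    """Return argument names that the tool's schema does not declare."""
    declared = tool["inputSchema"].get("properties", {})
    return [name for name in arguments if name not in declared]
```

In practice you would read the real schema from the tool list the server returns and run a check like this before calling the tool, so that a missing location is caught early.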

2. Setting Up the MCP Client

To connect to an MCP server, you need to create an MCP client. Here’s a simplified example for the Airbnb MCP server:

    from contextlib import AsyncExitStack

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client


    async def connect_to_mcp_server():
        """Connect to the Airbnb MCP server"""
        exit_stack = AsyncExitStack()

        # Configure server parameters
        server_params = StdioServerParameters(
            command="npx",
            args=["-y", "@openbnb/mcp-server-airbnb", "--ignore-robots-txt"],
            env={}
        )

        # Connect to the server
        stdio_transport = await exit_stack.enter_async_context(stdio_client(server_params))
        stdio, write = stdio_transport
        session = await exit_stack.enter_async_context(ClientSession(stdio, write))

        # Initialize the session
        await session.initialize()

        # List available tools
        response = await session.list_tools()
        tools = response.tools

        return session, tools, exit_stack

This sets up a connection to the Airbnb MCP server using the stdio transport method. You can adapt this for different servers by changing the command and arguments.
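For context on what the stdio transport carries: MCP messages are JSON-RPC 2.0 objects, and over stdio each one travels as a single newline-delimited line on the subprocess's stdin and stdout. `ClientSession` handles all of this for you, so the sketch below is only meant to show the message shape behind `list_tools()` and `call_tool()`. The helper names `encode_request` and `decode_message` are hypothetical.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # every JSON-RPC request needs its own id


def encode_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as one newline-terminated line."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message) + "\n"


def decode_message(line: str) -> dict:
    """Parse one line as it would be read from the server's stdout."""
    message = json.loads(line)
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    return message


# Roughly what travels over the pipe for list_tools() and call_tool()
list_tools_line = encode_request("tools/list")
call_tool_line = encode_request(
    "tools/call",
    {"name": "airbnb_search", "arguments": {"location": "Chicago"}},
)
```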

3. Creating Wrapper Functions

Next, create wrapper functions for the MCP tools you want to use. These functions make it easier to call the tools and process their responses:

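    import json


    async def search_airbnb_listings(session, location: str, **kwargs):
        """Search for Airbnb listings"""
        # Prepare parameters
        params = {"location": location, **kwargs}

        # Call the airbnb_search tool
        result = await session.call_tool("airbnb_search", params)

        # Process the response: collect the text parts of result.content
        texts = [item.text for item in result.content if getattr(item, "text", None)]

        # Extract listings (assumes JSON text with a "searchResults" list;
        # adjust the key to match what your server actually returns)
        simplified_listings = []
        for text in texts:
            try:
                payload = json.loads(text)
            except json.JSONDecodeError:
                continue
            if isinstance(payload, dict):
                simplified_listings.extend(payload.get("searchResults", []))

        # Format them for readability
        formatted_output = "\n".join(str(item) for item in simplified_listings)

        return {
            "success": True,
            "formatted_output": formatted_output or "\n".join(texts),
            "listings": simplified_listings
        }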

These wrapper functions handle the details of calling the MCP tools and transforming the raw responses into more user-friendly formats.
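As one way to approach the "format them for readability" step, here is a small sketch that reduces raw listings to a few fields and renders them as a numbered list. The key names (`name`, `price`, `url`) are assumptions for illustration, since the real payload depends on the MCP server. Both helper functions are hypothetical.

```python
def simplify_listing(raw: dict) -> dict:
    """Keep only a few fields; the key names are assumed for illustration."""
    return {
        "name": raw.get("name", "Unnamed listing"),
        "price": raw.get("price", "price unavailable"),
        "url": raw.get("url", ""),
    }


def format_listings(raw_listings: list, limit: int = 5) -> str:
    """Render up to `limit` listings as a numbered, human-readable list."""
    if not raw_listings:
        return "No listings found."
    lines = []
    for index, raw in enumerate(raw_listings[:limit], start=1):
        item = simplify_listing(raw)
        line = f"{index}. {item['name']} ({item['price']})"
        if item["url"]:
            # Put the link on its own indented line under the listing
            line += f"\n   {item['url']}"
        lines.append(line)
    return "\n".join(lines)
```

Capping the output with `limit` keeps chat replies short, which matters when the result is shown inside a conversation rather than on a results page.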

4. Implementing the Chat Protocol

To make your agent discoverable by ASI:One, you need to implement Fetch.ai’s Chat Protocol, which means setting up handlers for chat messages. To enable real-time messaging, the agent handles two core message types:

- 📥 ChatMessage: For receiving queries and sending responses

- 📤 ChatAcknowledgement: To confirm message receipt

These enable seamless communication between agents and users on the ASI:One platform. The Chat Protocol documentation covers the details.

    @chat_proto.on_message(model=ChatMessage)
    async def handle_chat_message(ctx, sender, msg):
        ...
        await ctx.send(sender, ChatAcknowledgement(...))

The Chat Protocol enables your agent to receive messages from ASI:One, process them, and send responses back in a standardized format.
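The receive, acknowledge, and reply pattern can be tried out without any Fetch.ai dependencies. The sketch below uses plain dataclasses as stand-ins for `ChatMessage` and `ChatAcknowledgement`, and a fake context that records messages instead of sending them. All class names, field names, and the ordering of acknowledgement before reply are assumptions for illustration, not the real uAgents API.

```python
import asyncio
from dataclasses import dataclass, field
from datetime import datetime, timezone
from uuid import UUID, uuid4


def _now() -> datetime:
    return datetime.now(timezone.utc)


@dataclass
class DemoChatMessage:  # stand-in for ChatMessage
    text: str
    msg_id: UUID = field(default_factory=uuid4)
    timestamp: datetime = field(default_factory=_now)


@dataclass
class DemoAcknowledgement:  # stand-in for ChatAcknowledgement
    acknowledged_msg_id: UUID
    timestamp: datetime = field(default_factory=_now)


class DemoContext:
    """Records outgoing messages instead of sending them over the network."""

    def __init__(self):
        self.sent = []

    async def send(self, destination: str, message) -> None:
        self.sent.append((destination, message))


async def handle_demo_message(ctx: DemoContext, sender: str, msg: DemoChatMessage):
    # 1. Acknowledge receipt so the sender knows the message arrived.
    await ctx.send(sender, DemoAcknowledgement(acknowledged_msg_id=msg.msg_id))
    # 2. Do the real work (here just an echo) and reply with a new message.
    await ctx.send(sender, DemoChatMessage(text=f"You asked: {msg.text}"))
```

In the real agent, step 2 is where the natural language parsing and the MCP tool call would happen before the reply is sent.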

5. Natural Language Understanding

For my agent to understand natural language requests, I used an AI-powered structured output approach:

    async def extract_airbnb_request(text: str):
        """Extract intent and parameters from user text using AI"""
        # Create a prompt template
        prompt = f"""
        Extract Airbnb request information from: "{text}"

        The user wants to get Airbnb information. Extract:
        1. Request type (search or details)
        2. Parameters (location, dates, etc.)
        """

        # Send to AI for parsing
        # Return structured request

This allows the agent to convert natural language queries into structured parameters for the MCP tools.
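The "return structured request" step usually comes down to parsing and validating whatever the model sends back. Here is a minimal sketch of that step, assuming the prompt asks the model to reply with JSON shaped like `{"request_type": "search", "parameters": {...}}`. That reply format, the `AirbnbRequest` class, and `parse_model_reply` are all assumptions for illustration; the call to the model itself is left out.

```python
import json
from dataclasses import dataclass, field

VALID_REQUEST_TYPES = {"search", "details"}


@dataclass
class AirbnbRequest:
    request_type: str
    parameters: dict = field(default_factory=dict)


def parse_model_reply(reply: str) -> AirbnbRequest:
    """Turn the model's JSON reply into a validated request."""
    # Models often wrap JSON in extra prose, so keep only the outermost {...}.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model reply")
    data = json.loads(reply[start:end + 1])

    request_type = data.get("request_type")
    if request_type not in VALID_REQUEST_TYPES:
        raise ValueError(f"unsupported request type: {request_type!r}")

    parameters = data.get("parameters") or {}
    if not isinstance(parameters, dict):
        raise ValueError("parameters must be a JSON object")
    return AirbnbRequest(request_type, parameters)
```

Rejecting anything outside the two known request types keeps a malformed model reply from turning into an unexpected tool call.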

6. Creating and Registering the Agent

Finally, create a uAgent and register it on Agentverse. Once the agent is running, make sure to click the local inspector link and connect the agent to a mailbox.

    from uagents import Agent, Context

    # Create the agent
    agent = Agent(
        name="mcp_service_agent",
        port=8004,
        mailbox=True  # Enable discovery
    )

    # Include the Chat Protocol (chat_proto comes from step 4)
    agent.include(chat_proto, publish_manifest=True)

    # Shared MCP state, populated on startup
    mcp_session = mcp_tools = mcp_exit_stack = None


    # Initialize MCP connection on startup
    @agent.on_event("startup")
    async def on_startup(ctx: Context):
        """Connect to MCP server on startup"""
        global mcp_session, mcp_exit_stack, mcp_tools

        # Connect to MCP server (connect_to_mcp_server comes from step 2)
        mcp_session, mcp_tools, mcp_exit_stack = await connect_to_mcp_server()
        ctx.logger.info(f"Connected to MCP server with {len(mcp_tools)} tools")


    # Clean up on shutdown
    @agent.on_event("shutdown")
    async def on_shutdown(ctx: Context):
        """Clean up MCP connection on shutdown"""
        global mcp_exit_stack

        if mcp_exit_stack:
            await mcp_exit_stack.aclose()
            ctx.logger.info("MCP connection closed")


    # Run the agent
    if __name__ == "__main__":
        agent.run()

When you run this agent, it will:

- Connect to the MCP server on startup

- Register itself on Agentverse with the Chat Protocol

- Be discoverable by the ASI:One LLM
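The startup and shutdown handlers rely on `AsyncExitStack` to keep the MCP connection open between the two events and to close everything in the right order at the end. The sketch below shows that pattern on its own, using a fake async context manager as a stand-in for `stdio_client` and `ClientSession`. The names `fake_connection`, `lifecycle_demo`, and `events` are hypothetical.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events = []  # records the order of lifecycle steps for inspection


@asynccontextmanager
async def fake_connection(name: str):
    """Stand-in for stdio_client / ClientSession."""
    events.append(f"open {name}")
    try:
        yield name
    finally:
        events.append(f"close {name}")


async def lifecycle_demo():
    stack = AsyncExitStack()
    # "startup": enter the contexts and keep them open past this point
    transport = await stack.enter_async_context(fake_connection("transport"))
    session = await stack.enter_async_context(fake_connection("session"))
    events.append(f"using {session} over {transport}")
    # "shutdown": aclose() exits the contexts in reverse order
    await stack.aclose()


asyncio.run(lifecycle_demo())
```

Because the stack unwinds in reverse, the session is closed before the transport it depends on, which is why the agent only needs a single `aclose()` call on shutdown.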

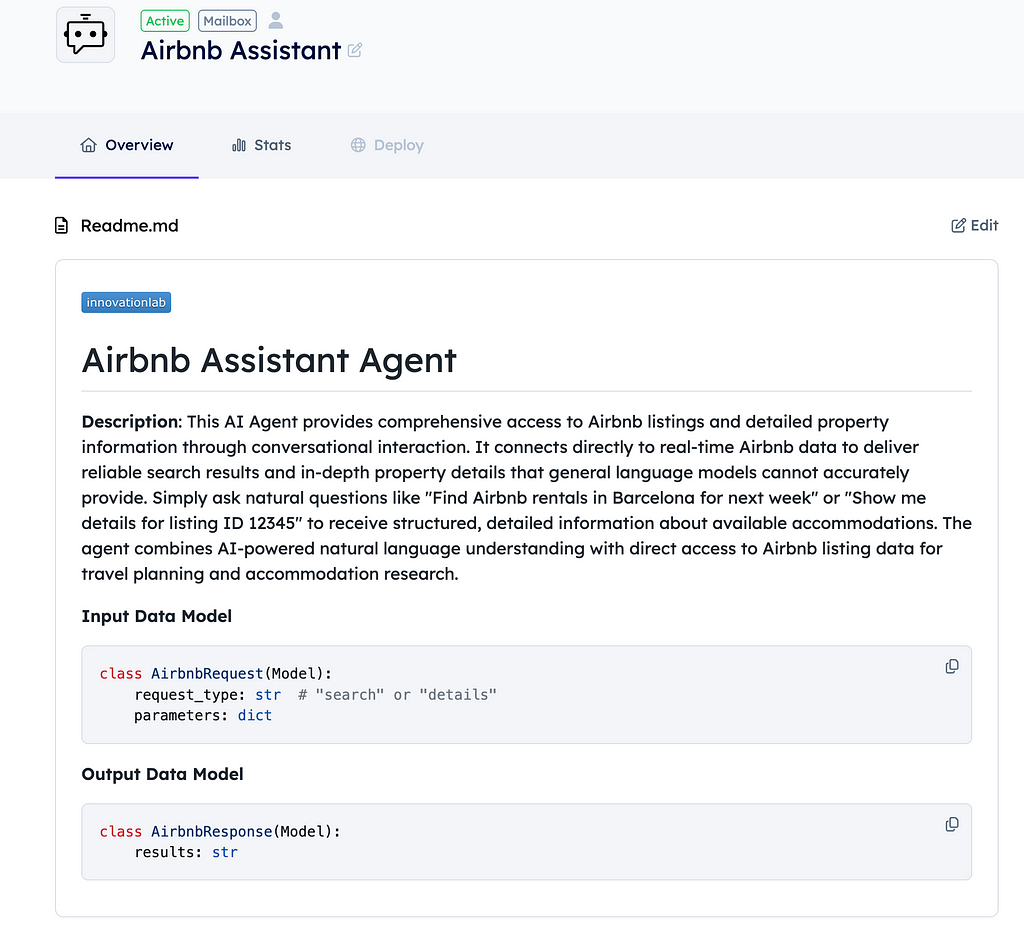

7. Discoverable on Agentverse & ASI:One

To make your agent discoverable on Agentverse and accessible through the ASI:One LLM, add a README.md for the agent in the Overview tab of its Agentverse page.

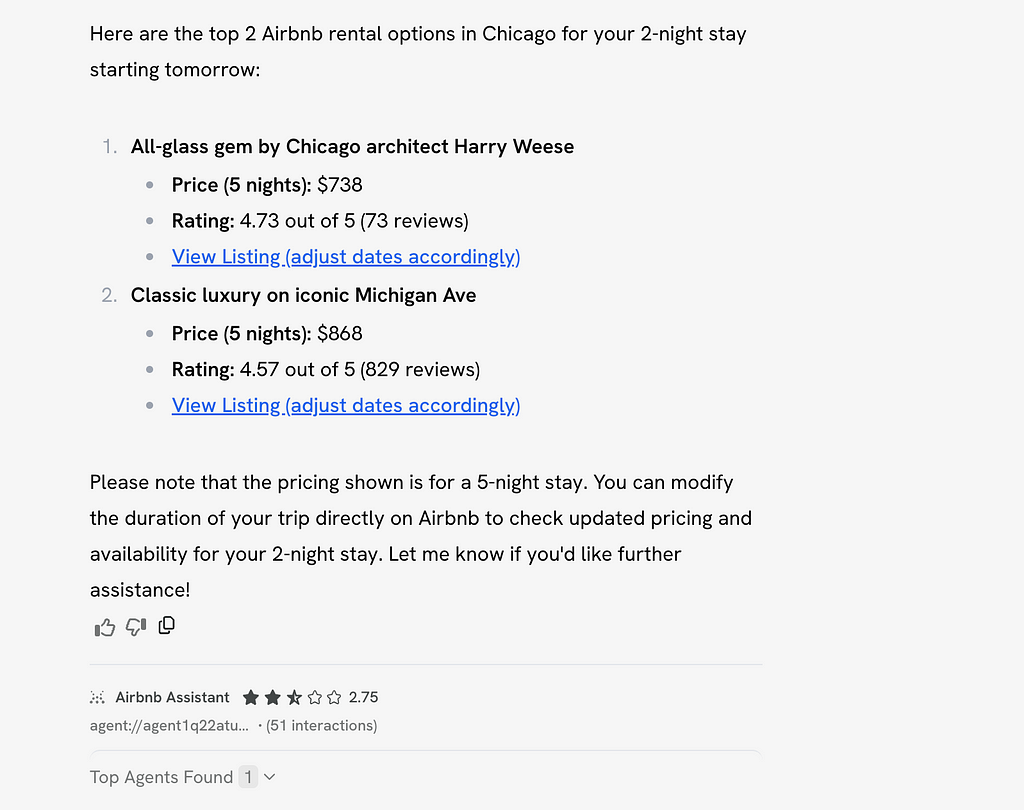

8. Query your Agent from ASI:One LLM

Log in to ASI:One and toggle the “Agents” switch so that ASI:One can connect with agents on Agentverse.

Query: “Find me two airbnb rentals in chicago for 2 nights starting tomorrow”

The Complete Flow

When a user asks ASI:One “Find me an Airbnb in San Francisco for next weekend,” the following happens:

- User query → The user asks ASI:One about Airbnb accommodations

- Agent discovery → ASI:One searches Agentverse and finds our Airbnb agent

- Request processing → Our agent extracts the intent (search) and parameters (location, dates)

- MCP tool call → The agent calls the airbnb_search tool with the parameters

- External service access → The MCP server retrieves real-time listings from Airbnb

- Response formatting → Our agent formats the results into a user-friendly message

- Result delivery → The response is sent back through Agentverse to ASI:One, which presents it to the user

This entire flow happens within a single conversation, so ASI:One can draw on real-time Airbnb data without the user leaving the chat.
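The hand-off from request processing to the MCP tool call can be sketched as a small dispatcher that maps the extracted request type to one of the two tools named earlier. `FakeSession` is a stand-in for an MCP `ClientSession` so the example runs on its own, and `dispatch` and `TOOL_FOR_REQUEST` are hypothetical names.

```python
import asyncio

# Maps the extracted request type to the MCP tool named in this article.
TOOL_FOR_REQUEST = {
    "search": "airbnb_search",
    "details": "airbnb_listing_details",
}


class FakeSession:
    """Stand-in for an MCP ClientSession; echoes the call instead of running it."""

    async def call_tool(self, name: str, arguments: dict):
        return {"tool": name, "arguments": arguments}


async def dispatch(session, request_type: str, parameters: dict):
    """Route a structured request to the matching MCP tool."""
    try:
        tool_name = TOOL_FOR_REQUEST[request_type]
    except KeyError:
        raise ValueError(f"unsupported request type: {request_type!r}") from None
    return await session.call_tool(tool_name, parameters)
```

With a real session in place of `FakeSession`, the returned value would be the tool result that the agent then formats into its chat reply.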

Extending to Other Services

The beauty of this approach is how easily it can be applied to other services:

1. Replace the MCP server with one for your target service (GitHub, Gmail, etc.)

       server_params = StdioServerParameters(
           command="npx",
           args=["-y", "@your-mcp-server-package"],
           env={}
       )

2. Update your wrapper functions to call the specific tools provided by your MCP server

3. Update the intent extraction for your service’s specific parameters

4. Register on Agentverse using the same pattern

The core architecture and approach remain the same regardless of the service you’re connecting to.
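If you expect to swap servers often, one option is to build the launch settings from a small helper instead of editing the parameters by hand. The sketch below assumes the server is an npm package started through `npx`, as in the Airbnb example. `npx_server` is a hypothetical helper, and the returned dictionary simply mirrors the `command`, `args`, and `env` fields used above.

```python
from typing import Optional


def npx_server(package: str, *flags: str, env: Optional[dict] = None) -> dict:
    """Build launch settings for an MCP server distributed as an npm package."""
    return {
        "command": "npx",
        "args": ["-y", package, *flags],  # -y skips npx's install prompt
        "env": dict(env or {}),
    }


# The Airbnb server from this article, and the placeholder package name
airbnb = npx_server("@openbnb/mcp-server-airbnb", "--ignore-robots-txt")
other = npx_server("@your-mcp-server-package")
```

The resulting dictionary can be unpacked into the parameters object, for example `StdioServerParameters(**airbnb)`.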

Conclusion

By combining Model Context Protocol with Fetch.ai’s agent ecosystem, we’ve created a powerful pattern for extending ASI:One’s capabilities with real-world services. This approach:

- Makes external APIs accessible through natural language

- Leverages standardized protocols for seamless integration

- Enables dynamic discovery of specialized capabilities

- Creates a more powerful and versatile AI assistant ecosystem

As more MCP servers become available and the Agentverse continues to grow, we’ll see increasingly sophisticated agents that can seamlessly collaborate to solve complex problems and provide real utility to users.

The full code for this project is available on GitHub if you’d like to explore the implementation details or adapt it for your own use case.

Bringing remote MCP Servers into the Agentverse Ecosystem, discoverable by ASI-One LLM was originally published in Fetch.ai on Medium.
