EdgeCloud: Taking genAI to the Next Level with AI Model Pipelining

The concept of a software workflow has been around for decades, often to automate the step-by-step manual work found in enterprise supply chains and front-office productivity applications. In the lead-up to the launch of EdgeCloud, the Theta engineering team has been researching generative AI model pipelines: end-to-end constructs that orchestrate the flow of data into, and the outputs from, a set of AI models.
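To make the idea concrete, here is a minimal Python sketch of a model pipeline, assuming each model can be treated as a plain callable that takes one input and returns one output. The `Stage` and `Pipeline` names are hypothetical and are not part of any EdgeCloud API; the lambdas stand in for real hosted models.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Stage:
    """One step in a hypothetical model pipeline: a name plus a callable model."""
    name: str
    model: Callable[[Any], Any]


@dataclass
class Pipeline:
    """Orchestrates data flow by feeding each stage's output into the next stage."""
    stages: list[Stage] = field(default_factory=list)

    def run(self, data: Any) -> tuple[Any, dict[str, Any]]:
        trace: dict[str, Any] = {}
        for stage in self.stages:
            data = stage.model(data)  # this stage's output becomes the next stage's input
            trace[stage.name] = data  # keep intermediate outputs for inspection
        return data, trace


if __name__ == "__main__":
    # Hypothetical stand-in "models"; a real pipeline would call hosted AI models.
    pipeline = Pipeline([
        Stage("transcribe", lambda clip: f"transcript of {clip}"),
        Stage("summarize", lambda text: text.upper()),
    ])
    result, trace = pipeline.run("match.mp4")
    print(result)  # TRANSCRIPT OF MATCH.MP4
```

Keeping the intermediate outputs in `trace` is a design choice that makes each stage's contribution visible, which is useful when debugging or comparing stages.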

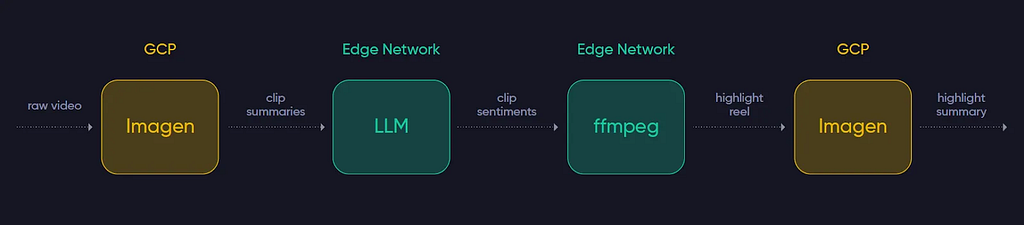

Theta first presented this concept last year at the Google Cloud Next conference for video-to-text applications, using Google's Imagen on the Vertex AI platform together with multiple EdgeCloud text-to-text LLMs and video encoding models. The diagram below shows an example: an esports game highlight reel generated by a four-model pipeline that includes Google's Imagen.

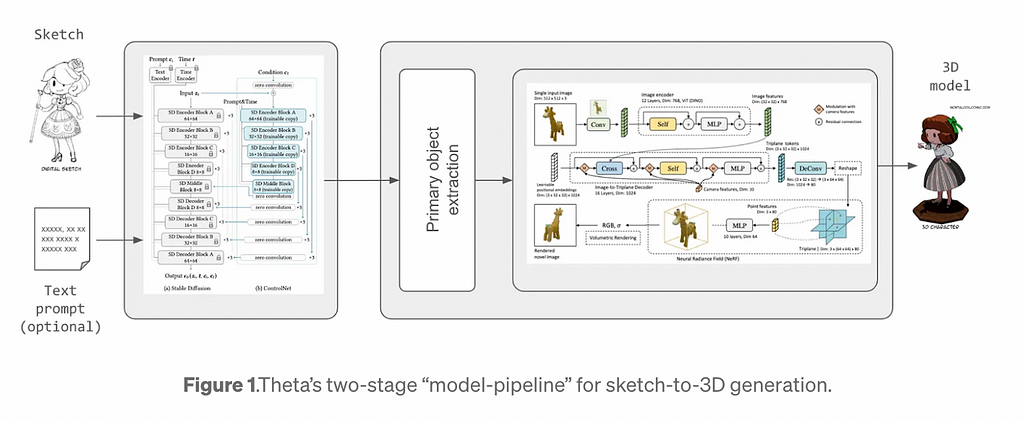

Extending this concept, last week Theta announced its proprietary sketch-to-3D generative AI model, implemented as a two-stage model pipeline: a text/sketch-to-2.5D image generator whose output feeds a 3D model generator.

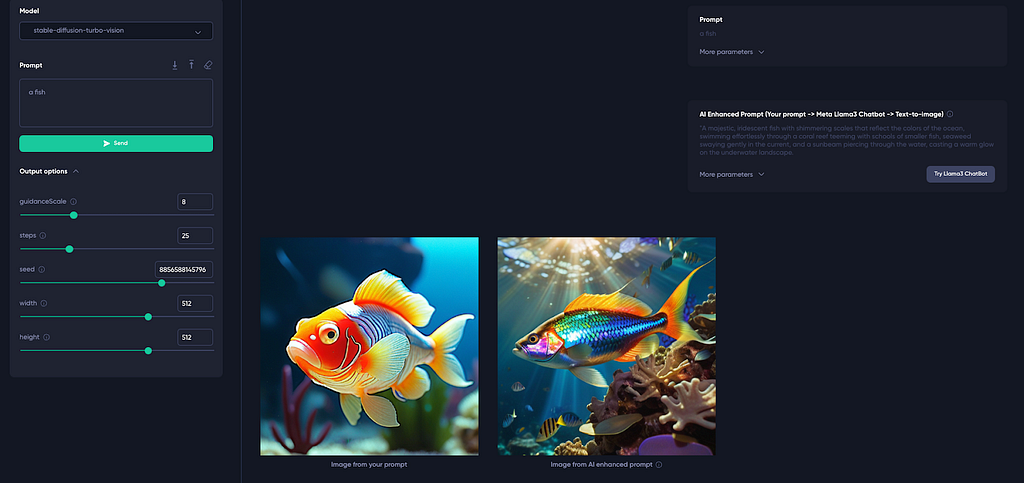

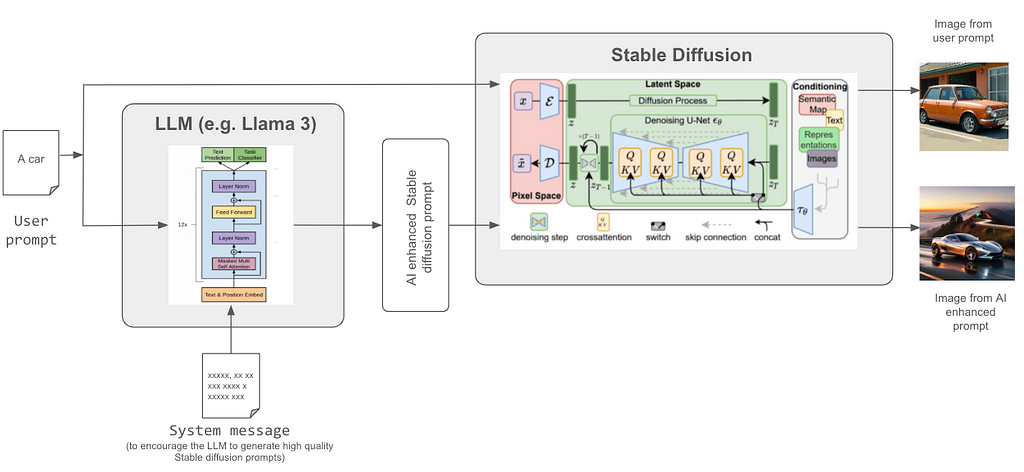

Today, Theta is excited to release an upgrade to its EdgeCloud AI Showcase that adds a new two-stage text-to-image model. The user's original prompt is automatically passed to the Llama 3 LLM, which generates an AI-enhanced prompt optimized for Stable Diffusion; that prompt is then fed into the image generation model. For learning purposes, both output images are displayed side by side along with their prompts, so users can see the difference and improve on their own prompts.
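The two-stage flow described above can be sketched as follows. This is an illustration under stated assumptions, not the showcase's actual implementation: `llm` and `image_model` are hypothetical callables standing in for a Llama 3 completion and a Stable Diffusion generation call, and `ENHANCE_TEMPLATE` is an invented instruction, since the prompt EdgeCloud actually sends to Llama 3 is not described here.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical instruction for the LLM stage; the showcase's real prompt is not published.
ENHANCE_TEMPLATE = (
    "Rewrite the following request as a detailed Stable Diffusion prompt "
    "(subject, style, lighting, composition): {prompt}"
)


@dataclass
class Comparison:
    """Side-by-side result: each prompt paired with the image generated from it."""
    original_prompt: str
    original_image: bytes
    enhanced_prompt: str
    enhanced_image: bytes


def text_to_image_with_enhancement(
    prompt: str,
    llm: Callable[[str], str],            # hypothetical stand-in for a Llama 3 call
    image_model: Callable[[str], bytes],  # hypothetical stand-in for a Stable Diffusion call
) -> Comparison:
    # Stage 1: the LLM rewrites the user's prompt into an image-model-friendly prompt.
    enhanced = llm(ENHANCE_TEMPLATE.format(prompt=prompt)).strip()
    # Stage 2: generate images from both prompts so they can be shown side by side.
    return Comparison(
        original_prompt=prompt,
        original_image=image_model(prompt),
        enhanced_prompt=enhanced,
        enhanced_image=image_model(enhanced),
    )


if __name__ == "__main__":
    def fake_llm(text: str) -> str:
        # Ignores its input; a real LLM would expand the user's prompt.
        return " a red fox in snow, golden hour, 35mm photo, highly detailed "

    def fake_image_model(p: str) -> bytes:
        # Pretend the "image" is just the prompt's bytes.
        return p.encode("utf-8")

    result = text_to_image_with_enhancement("a fox", fake_llm, fake_image_model)
    print(result.enhanced_prompt)
```

Generating from both prompts, rather than only the enhanced one, is what makes the side-by-side comparison possible; the trade-off is one extra image generation per request.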

Under the hood, an AI model pipeline includes the raw input data from the source, the features derived from it, the machine learning model and its parameters, and the model's prediction outputs. This AI software requires GPU hardware to run and scale ML models, as well as tools for data cleaning, data preparation, feature engineering and selection, and finally for interpreting and using the model's output. The process becomes even more complex when data science teams, often with support from IT and DevOps, must create and manage these pipelines themselves. Maintaining them ad hoc is expensive, difficult, and time-consuming.

Theta EdgeCloud is the first hybrid cloud-edge computing platform that can effectively implement machine learning operations (MLOps) to help configure, manage, and support AI pipelines. Stay tuned for more details throughout 2024–25, and in the meantime, have fun playing with EdgeCloud’s new AI showcase.

EdgeCloud: Taking genAI to the Next Level with AI Model Pipelining was originally published in Theta Network on Medium.
