Introducing Distributed Verifiable LLM Inference on Theta EdgeCloud: Combining the Strengths of AI and Blockchain

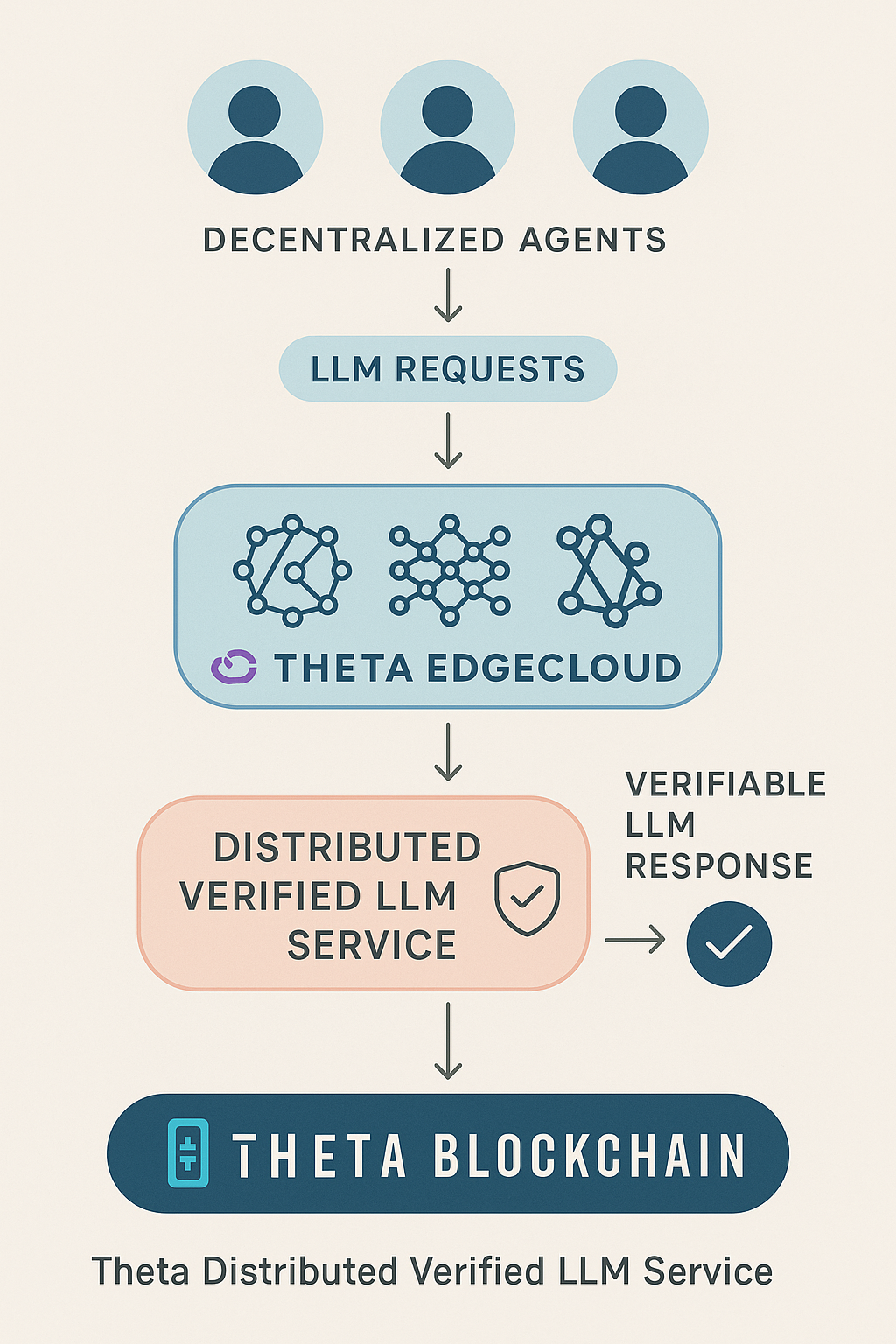

We’re excited to announce the newest feature of Theta EdgeCloud: a large language model (LLM) inference service with distributed verifiability. For the first time in the industry, chatbots and AI agents will be able to perform trustworthy, independently verifiable LLM inference, powered by blockchain-backed public randomness beacons. This unique technology combines the strengths of cutting-edge AI with the trustless, decentralized nature of blockchain.

With this implementation, EdgeCloud is the first and only platform, among both crypto-native and traditional cloud providers, to offer trustless LLM inference to everyone. For sensitive computational needs in enterprise, academia, and other verticals, EdgeCloud is the only cloud solution that can guarantee the integrity of LLM outputs.

The Idea

Modern AI agents rely heavily on LLMs to generate responses and perform complex tasks. But can you really trust the output? Today, most agent frameworks rely on centralized LLM APIs (e.g., OpenAI) or on hardware-enforced security such as TEEs (Trusted Execution Environments). These approaches require trusting a centralized provider or closed-source software, either of which could alter or fabricate results.

The recent release of DeepSeek-V3/R1, the first truly competitive open-source alternative to proprietary LLMs, opens the door to full-stack verification. This breakthrough enables communities to begin moving away from opaque AI APIs toward transparent, inspectable inference workflows.

Building on these advancements, we designed and implemented a Distributed Verifiable Inference system that guarantees LLM outputs are both reproducible and tamper-proof, even against the service provider itself (Theta Labs, in the case of EdgeCloud). By combining open-source AI models, decentralized compute, and blockchain-based verifiability, we’re building a foundation of trust for the next generation of AI agents.

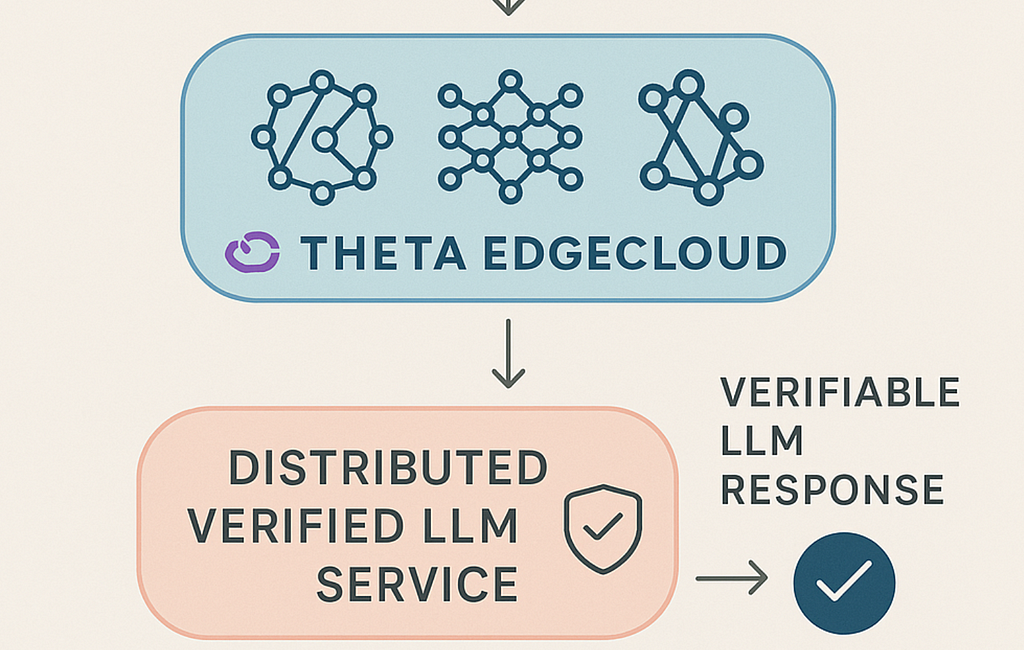

Our Solution

We’re releasing the first industry-grade verifiable LLM inference engine, integrated directly into Theta EdgeCloud. Here’s how it achieves public verifiability:

- Deterministic Token Probability: Given a prompt, the LLM computes the probability distribution over the next token. Because we use open-source models (e.g., DeepSeek R1), anyone can verify this step by running the model locally or on another trusted node.

- Verifiable Sampling: Sampling the next token is normally a non-deterministic process. To make it verifiable, we draw samples using a publicly verifiable random seed, inspired by protocols such as Ethereum’s RANDAO. With this seed, sampling becomes pseudo-random and fully reproducible, so any observer can independently validate the final output.
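To make the verifiable-sampling step concrete, here is a minimal Python sketch. It assumes the next-token distributions are already known and fixed (in a real LLM, each step's distribution depends on the tokens sampled before it). Python's built-in `random.Random` stands in for the seeded PRNG, and tokens are drawn by inverse-CDF sampling; the function names and toy distributions are hypothetical and do not reflect the actual vLLM sampler or how EdgeCloud derives its seed.

```python
import random
from typing import List, Sequence


def sample_token(probs: Sequence[float], rng: random.Random) -> int:
    """Draw one token index from a next-token distribution by inverse-CDF sampling."""
    u = rng.random() * sum(probs)  # uniform draw in [0, total probability mass)
    cumulative = 0.0
    for index, p in enumerate(probs):
        cumulative += p
        if u < cumulative:
            return index
    return len(probs) - 1  # guard against floating-point rounding at the tail


def sample_sequence(step_probs: Sequence[Sequence[float]], seed: int) -> List[int]:
    """Sample one token per step; all randomness comes from a PRNG seeded with the public seed."""
    rng = random.Random(seed)
    return [sample_token(probs, rng) for probs in step_probs]


# Hypothetical distributions over a 4-token vocabulary for three decoding steps.
steps = [
    [0.1, 0.6, 0.2, 0.1],
    [0.25, 0.25, 0.25, 0.25],
    [0.7, 0.1, 0.1, 0.1],
]
seed = 2825676771  # seed value from the sample response in this post

first = sample_sequence(steps, seed)
second = sample_sequence(steps, seed)
print(first == second)  # True: same seed and same distributions give the same tokens
```

Anyone who knows the distributions and the seed can rerun `sample_sequence` and obtain exactly the same tokens, which is the property the verifiable-sampling step relies on.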

This two-part design ensures that anyone can use the public randomness to verify the LLM’s output for a given input. As a result, no party, including the LLM hosting service, can tamper with the model output without being detected, which provides strong integrity guarantees.
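The two-part check can be sketched end to end as follows. This is a hypothetical illustration, not the EdgeCloud verification code: `toy_model` stands in for an open-weights LLM that deterministically maps a token prefix to next-token probabilities, and the sketch assumes the verifier's model reproduces those probabilities exactly. The verifier regenerates the output with the published seed and compares it with the claimed tokens.

```python
import hashlib
import random
from typing import Callable, List, Sequence

Distribution = List[float]
Model = Callable[[Sequence[int]], Distribution]


def toy_model(prefix: Sequence[int], vocab_size: int = 5) -> Distribution:
    """Hypothetical stand-in for an open-weights LLM: deterministic next-token probabilities."""
    digest = hashlib.sha256(repr(list(prefix)).encode("utf-8")).digest()
    weights = [byte + 1 for byte in digest[:vocab_size]]  # strictly positive weights from the hash
    total = sum(weights)
    return [w / total for w in weights]


def sample_token(probs: Sequence[float], rng: random.Random) -> int:
    """Inverse-CDF draw of one token index from a probability vector."""
    u = rng.random() * sum(probs)
    cumulative = 0.0
    for index, p in enumerate(probs):
        cumulative += p
        if u < cumulative:
            return index
    return len(probs) - 1  # guard against floating-point rounding at the tail


def generate(model: Model, prompt: Sequence[int], seed: int, max_new_tokens: int) -> List[int]:
    """Autoregressive decoding where all sampling randomness comes from the public seed."""
    rng = random.Random(seed)
    tokens = list(prompt)
    output: List[int] = []
    for _ in range(max_new_tokens):
        token = sample_token(model(tokens), rng)
        tokens.append(token)
        output.append(token)
    return output


def verify(model: Model, prompt: Sequence[int], seed: int, claimed_output: Sequence[int]) -> bool:
    """Re-run decoding with the published seed and check it reproduces the claimed tokens."""
    return generate(model, prompt, seed, len(claimed_output)) == list(claimed_output)


prompt = [3, 1, 4]
seed = 2825676771  # seed value from the sample response in this post
claimed = generate(toy_model, prompt, seed, max_new_tokens=8)
print(verify(toy_model, prompt, seed, claimed))  # True

tampered = claimed[:-1] + [(claimed[-1] + 1) % 5]  # alter the final token
print(verify(toy_model, prompt, seed, tampered))  # False
```

Because the seed is public, any change to the claimed tokens shows up as a mismatch on re-generation. With a real model, exact agreement also depends on running the same weights and producing the same probabilities.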

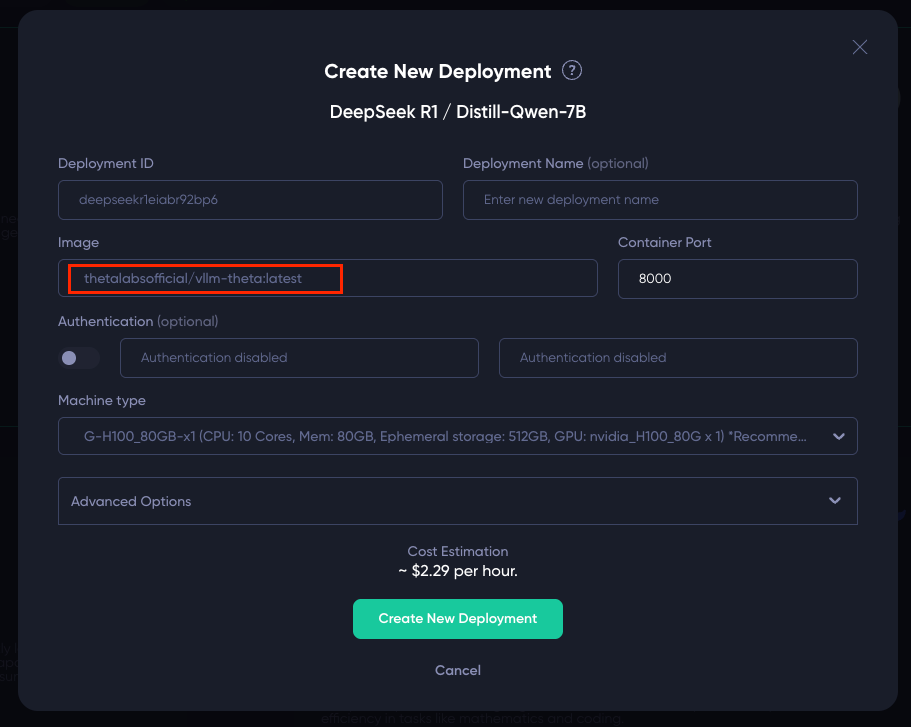

To implement this solution, we enhanced vLLM, one of the most popular industry-grade LLM inference engines, with additional components that support public verifiability. We have packaged the implementation into a Docker container image and released it publicly on our Docker Hub account:

https://hub.docker.com/repository/docker/thetalabsofficial/vllm-theta

We have also updated our standard template for the “DeepSeek R1 / Distill-Qwen-7B” model on the Theta EdgeCloud dedicated model launchpad to use this container image by default.

With this container image, each LLM inference result includes the extra section shown below. It contains public randomness extracted from the Theta Blockchain, which lets users verify the LLM’s output for the given prompt.

```json
{
  …
  "theta_verified": {
    "seed": 2825676771,
    "verification_hash": "aec8beec3306dddb9b9bad32cb90831d0d04132e57b318ebce64fb0f77208cdc",
    "sampling_params": {
      "seed": 2825676771,
      …
    },
    "block": {
      "hash": "0xd8ba61b261ddda55520301ee23846a547615c60d29c1c4a893779ba0d53f0656",
      "height": "30168742",
      "timestamp": "1749770570"
    }
  }
}
```
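A client consuming this response might read the `theta_verified` section along these lines. This is a minimal Python sketch: the field names come from the sample above, the elided (`…`) fields are simply left out of the test payload, and the consistency check between the two `seed` fields is an assumption of this sketch rather than documented behavior. The post does not specify how `verification_hash` is computed, so the sketch only reads it.

```python
import json
from dataclasses import dataclass


@dataclass
class ThetaVerified:
    seed: int
    verification_hash: str
    block_hash: str
    block_height: int
    block_timestamp: int


def parse_theta_verified(response_text: str) -> ThetaVerified:
    """Extract the theta_verified section from a JSON inference response (hypothetical helper)."""
    section = json.loads(response_text)["theta_verified"]
    seed = section["seed"]
    # Assumed sanity check: the seed used for sampling should match the published seed.
    if section["sampling_params"]["seed"] != seed:
        raise ValueError("sampling_params.seed does not match the published seed")
    block = section["block"]
    return ThetaVerified(
        seed=seed,
        verification_hash=section["verification_hash"],
        block_hash=block["hash"],
        block_height=int(block["height"]),  # height and timestamp are strings in the sample
        block_timestamp=int(block["timestamp"]),
    )


sample = """
{
  "theta_verified": {
    "seed": 2825676771,
    "verification_hash": "aec8beec3306dddb9b9bad32cb90831d0d04132e57b318ebce64fb0f77208cdc",
    "sampling_params": {"seed": 2825676771},
    "block": {
      "hash": "0xd8ba61b261ddda55520301ee23846a547615c60d29c1c4a893779ba0d53f0656",
      "height": "30168742",
      "timestamp": "1749770570"
    }
  }
}
"""
info = parse_theta_verified(sample)
print(info.seed, info.block_height)  # 2825676771 30168742
```

The block hash, height, and timestamp identify which Theta block supplied the public randomness, so a verifier can look that block up independently.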

On-Chain Publishing (Optional)

For higher-stakes use cases, we envision publishing inference metadata (prompt, distribution, random seed, result) on-chain, where it can be attested to by decentralized guardians or witnesses. This opens the door to auditable and trustless AI services, with potential integrations into DAO tooling, autonomous agents, and DeFi risk engines.
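As a hypothetical illustration of what witnesses could attest to, the sketch below hashes a canonical JSON encoding of inference metadata into a single SHA-256 digest, which could be published on-chain while the full record stays off-chain. The record layout and the `inference_commitment` function are assumptions, not a Theta format; the token distribution is left out for brevity, and nothing here describes how `verification_hash` is computed.

```python
import hashlib
import json


def inference_commitment(prompt: str, seed: int, output: str) -> str:
    """Return a SHA-256 hex digest over a canonical JSON encoding of inference metadata."""
    record = {"prompt": prompt, "seed": seed, "output": output}
    # Sorted keys and compact separators give every party the same bytes to hash.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


seed = 2825676771  # seed value from the sample response in this post
c1 = inference_commitment("What is 2 + 2?", seed, "4")
c2 = inference_commitment("What is 2 + 2?", seed, "4")
c3 = inference_commitment("What is 2 + 2?", seed, "5")
print(c1 == c2, c1 == c3)  # True False
```

Anyone holding the full record can recompute the digest and compare it with the on-chain value; changing the prompt, seed, or output changes the digest.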

The Future of AI Is Verifiable

As AI agents become more autonomous and decision-making shifts from humans to machines, trust in the LLM inference layer becomes foundational. As these agents are integrated into every facet of our daily lives and commerce, the ability to verify and trust their outputs and actions will be critical to safety, security, and financial integrity. Our newly released service brings a much-needed layer of transparency, auditability, and security to these AI workflows. With the power of the Theta Blockchain and EdgeCloud and open-source innovation, we’re leading the way in making AI not just powerful but provably trustworthy.

Introducing Distributed Verifiable LLM Inference on Theta EdgeCloud: Combining the Strengths of AI… was originally published in Theta Network on Medium, where people are continuing the conversation by highlighting and responding to this story.
